Blog

The Hybrid Workforce is Here: What CX Leaders Must Get Right in 2026

AI is no longer just a productivity layer in the contact center — it’s becoming part of the workforce itself. In this preview of our upcoming webinar, we explore what the “hybrid workforce” really means for CX leaders in 2026, how human and AI agents will work together at scale, and what organizations must get right now to design, govern, and measure this new operating model.

How Cresta Scales Data Annotation With a Human-Supervised Multi-Agent System (MAS)

How do you scale high-quality data annotation without sacrificing rigor? In this post, we explore our multi-agent approach—combining multiple LLM annotators, structured deliberation, and human oversight—to replicate the discipline of expert human workflows at enterprise scale.

Talk to Your Dashboards: How Cresta AI Analyst Unlocks Smarter Insights

This post explores why traditional BI tools fall short for modern CX leaders, and how AI-powered conversation intelligence turns static dashboards into real-time answers, helping teams move from “what happened” to “why” in minutes, not weeks.

Crafting a Natural-Sounding AI Voice

Go behind the scenes of Signal, Cresta’s AI concierge, and explore the technical foundations that power its conversational experience.

Why P&C Insurers Are Turning to AI Agents for FNOL and Claims Support

How AI agents transform insurance claims by improving first notice of loss (FNOL) intake, reducing call volume, and delivering faster, clearer experiences when policyholder trust is on the line.

From Strategy to Scale: Introducing New Leaders Scaling Cresta’s Partner Ecosystem

As enterprise AI adoption accelerates, partnerships matter more than ever. In this post, Cresta introduces new partnership leaders Ben Evans and Vanya Jakovljevic—and shares how we’re investing in our partner ecosystem to help customers move from AI pilots to real impact.

Peak-Demand Excellence: How AI Agents Elevate Service for Telcos and Utilities

Telcos and utilities face relentless volume spikes during outages, billing cycles, and policy changes—when customers need fast, accurate answers most. This blog explores how AI agents can absorb predictable, high-volume interactions, and where automation delivers the greatest impact while preserving seamless escalation to human agents.

How CVS Health Went From Scoring 5% of Calls to 100% with AI-Powered Conversation Intelligence

CVS Health shares how it is accelerating customer experience impact with AI—achieving immediate reductions in after-call work, unlocking predictive CSAT across 100% of calls, and dramatically improving time to insight.

Evaluating AI Voices – What Does It Mean to Sound “Good”?

"Your AI Agent doesn't sound good" is perhaps the critique that anyone building a voice agent dreads most.

When Every Word Matters: Engineering Real-Time Multilingual Intelligence for Human Conversations

This technical deep dive unpacks the architectural decisions, latency optimizations, and model evaluation frameworks behind our Real-Time Translator (RTT)—showing how language detection, transcription, translation, and speech synthesis work together to enable seamless global communication.

Introducing Cresta’s Agent Operations Center: A Unified Command Center for Human and AI Agents

Cresta’s Agent Operations Center is the unified command hub for supervising human and AI agents in real time. Monitor live conversations, guide interactions instantly, and ensure automation delivers accurate, compliant, and on-brand experiences at scale.

How We Built a State-of-the-Art Research Agent for Call Center Conversation Analytics

Take a deep dive into how we built Cresta AI Analyst and the lessons we learned along the way.

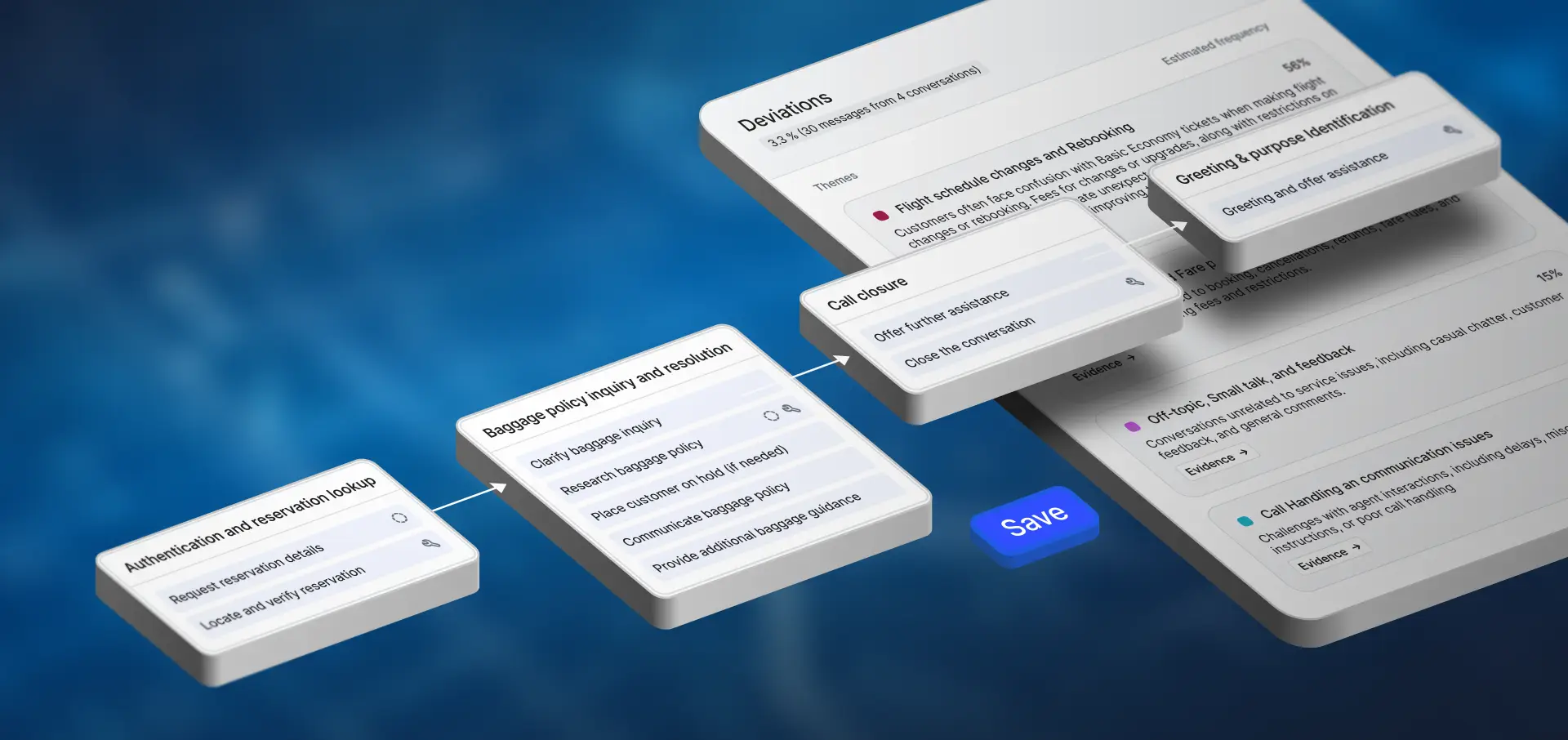

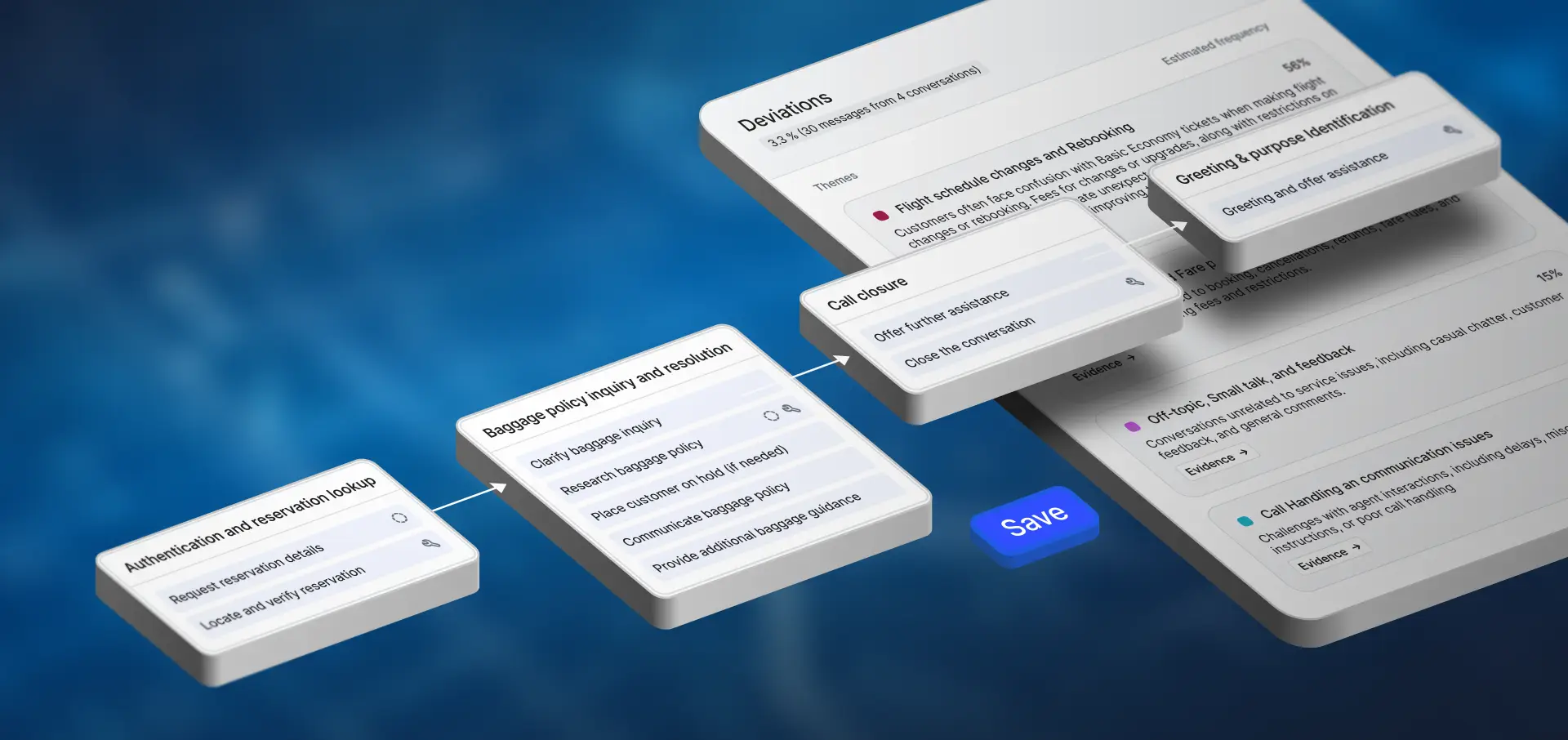

Introducing Automation Discovery: Build the Blueprint for CX Automation

A data-driven blueprint for automation and workflow improvement—showing what to automate, how conversations work today, and where to improve CX.

Building and Deploying Production‑Grade AI Agents: Cresta’s End‑to‑End Approach

In this post, we walk through Cresta’s full lifecycle for building and deploying production-grade AI agents.

Ready to see the results for yourself?

See the unified platform for human and AI agents in action with a personalized demo