Blog

Introducing Cresta’s Agent Operations Center: A Unified Command Center for Human and AI Agents

Cresta’s Agent Operations Center is the unified command hub for supervising human and AI agents in real time. Monitor live conversations, guide interactions instantly, and ensure automation delivers accurate, compliant, and on-brand experiences at scale.

Why P&C Insurers Are Turning to AI Agents for FNOL and Claims Support

How AI agents transform insurance claims by improving FNOL intake, reducing call volume, and delivering faster, clearer experiences when policyholder trust is on the line.

From Strategy to Scale: Introducing New Leaders Scaling Cresta’s Partner Ecosystem

As enterprise AI adoption accelerates, partnerships matter more than ever. In this post, Cresta introduces new partnership leaders Ben Evans and Vanya Jakovljevic—and shares how we’re investing in our partner ecosystem to help customers move from AI pilots to real impact.

Experts Predict: How AI Will Continue to Reshape Customer Experience in 2026

Industry leaders and Cresta experts share their predictions on how AI will reshape customer experience in 2026—and what CX leaders should prepare for next.

Peak-Demand Excellence: How AI Agents Elevate Service for Telcos and Utilities

Telcos and utilities face relentless volume spikes during outages, billing cycles, and policy changes—when customers need fast, accurate answers most. This blog explores how AI agents can absorb predictable, high-volume interactions, and where automation delivers the greatest impact while preserving seamless escalation to human agents.

How CVS Health Went From Scoring 5% of Calls to 100% with AI-powered Conversation Intelligence

CVS Health shares how it is accelerating customer experience impact with AI—achieving immediate reductions in after-call work, unlocking predictive CSAT across 100% of calls, and dramatically improving time to insight.

Evaluating AI Voices – What Does It Mean to Sound “Good”?

"Your AI Agent doesn't sound good"—this critique is perhaps the deepest dread for anyone building a voice agent.

When Every Word Matters: Engineering Real-Time Multilingual Intelligence for Human Conversations

This technical deep dive unpacks the architectural decisions, latency optimizations, and model evaluation frameworks behind our Real-Time Translator (RTT)—showing how language detection, transcription, translation, and speech synthesis work together to enable seamless global communication.

How We Built a State-of-the-Art Research Agent for Call Center Conversation Analytics

Take a deep dive into how we built Cresta AI Analyst and the lessons we learned along the way.

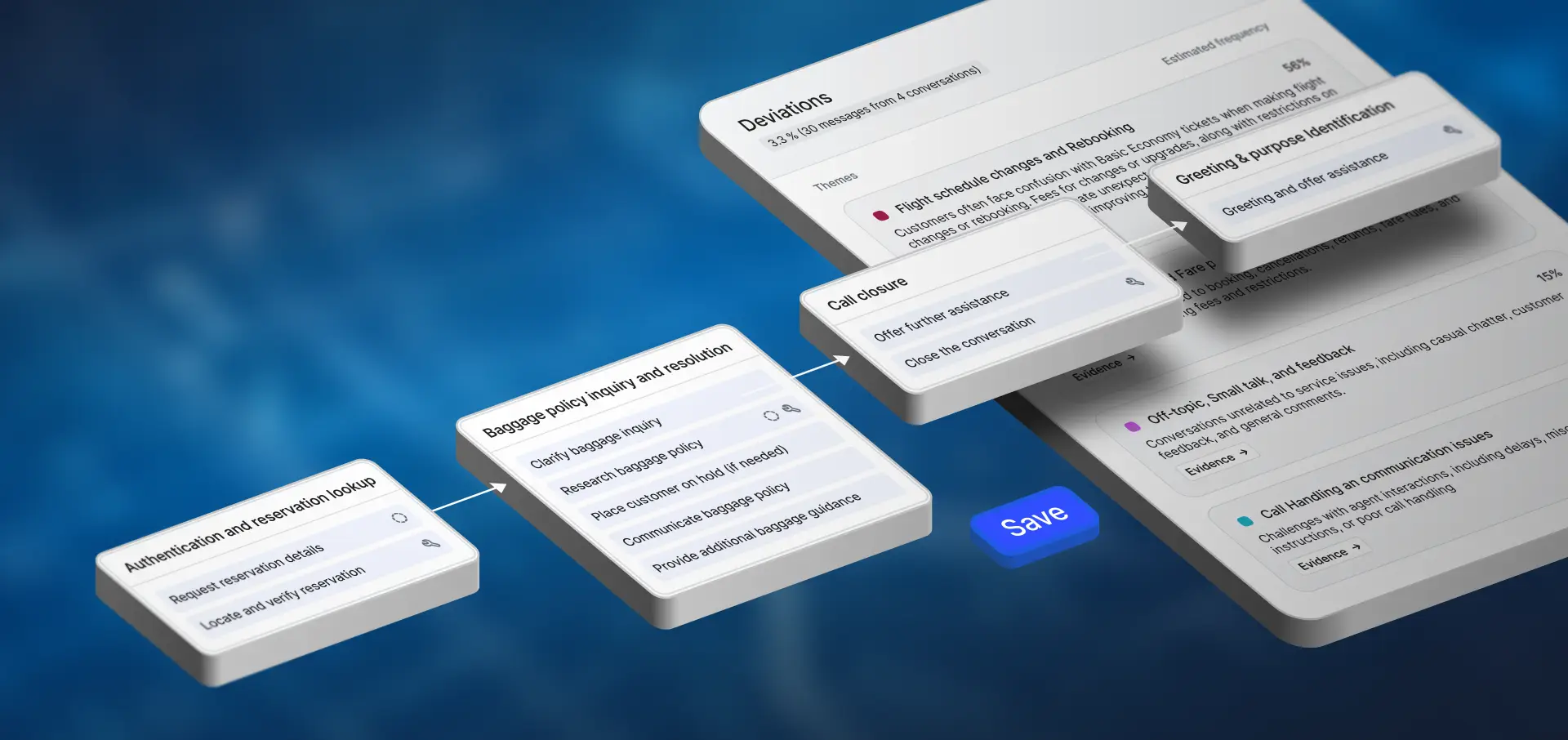

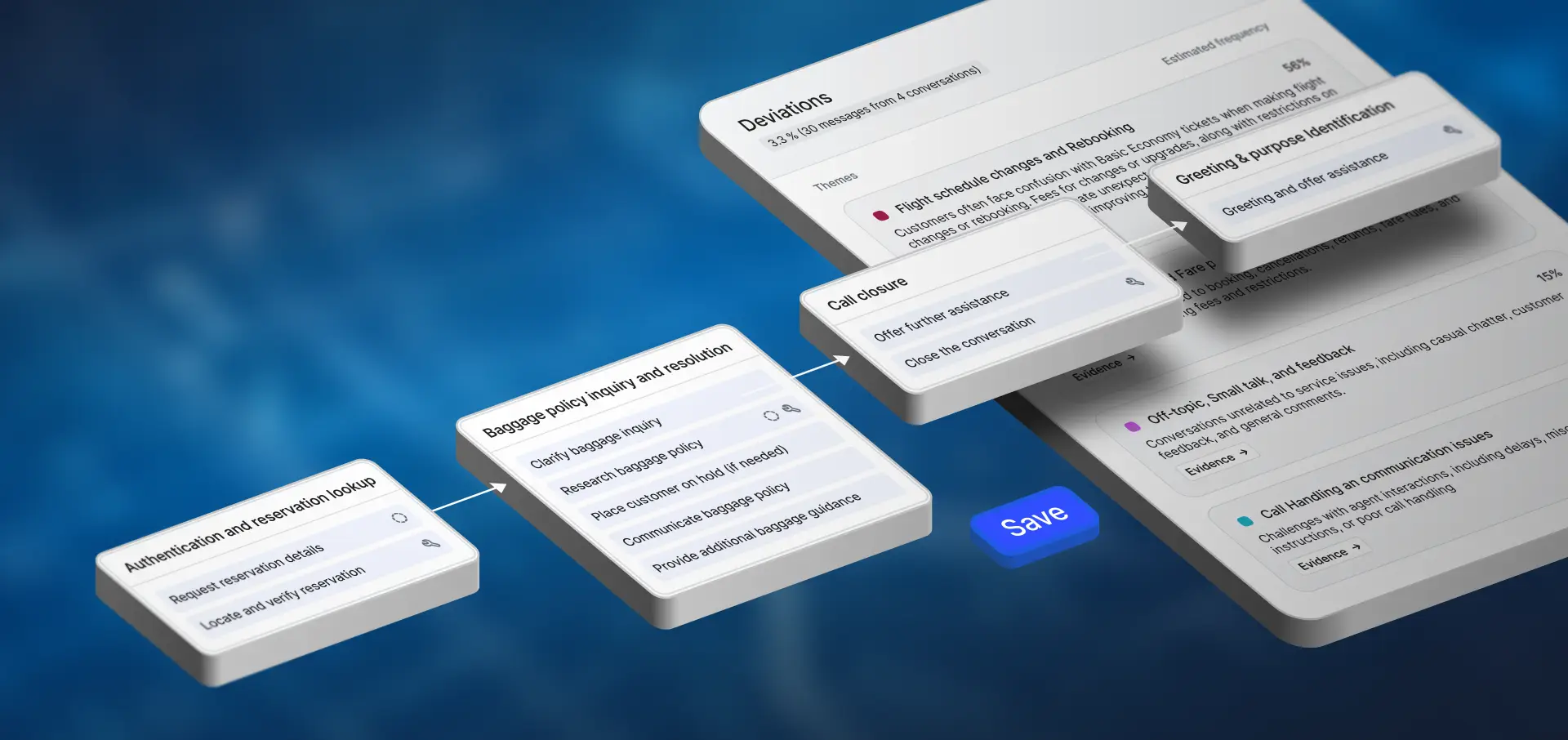

Introducing Automation Discovery: Build the Blueprint for CX Automation

A data-driven blueprint for automation and workflow improvement—showing what to automate, how conversations work today, and where to improve CX.

Building and Deploying Production‑Grade AI Agents: Cresta’s End‑to‑End Approach

In this post, we walk through Cresta’s full lifecycle for building and deploying production-grade AI agents.

A New Wave in AI-Driven CX: Inside Cresta’s First-Ever WAVE

Cresta’s inaugural WAVE 2025 united AI and CX leaders for three days of innovation, insight, and connection, from breakthrough launches to on-field fun at AT&T Stadium.

Cresta Expands Global Reach with Real-Time Voice Translation and Multilingual AI Across the Platform

Cresta’s latest launch makes global CX effortless—with real-time voice translation, multilingual AI, and insights across every language.

Beyond Automation: How Cresta’s New Innovations Give Businesses Control and Confidence

Cresta WAVE 2025 has kicked off, bringing together AI and CX leaders for three days of innovation, connection, and breakthrough product announcements.

Meet Signal: Cresta’s AI Agent Powering a New Kind of Website Experience

Meet Signal, Cresta’s new AI concierge for our website. Signal transforms the website experience into an intelligent, conversational journey—answering questions with cited sources, switching seamlessly between chat and voice, and even connecting visitors to demos or sales.
