Cresta’s voice platform is a cutting-edge solution designed to provide real-time insights and actionable intelligence during customer interactions. It integrates with a wide variety of Contact Center as a Service (CCaaS) platforms, capturing and processing live audio streams to assist agents with timely guidance and recommendations. To shed light on the technology behind our voice platform, we’ve divided our exploration into a three-part series:

Last week, we published part one: Handling Incoming Traffic with Customer-Specific Subdomains.

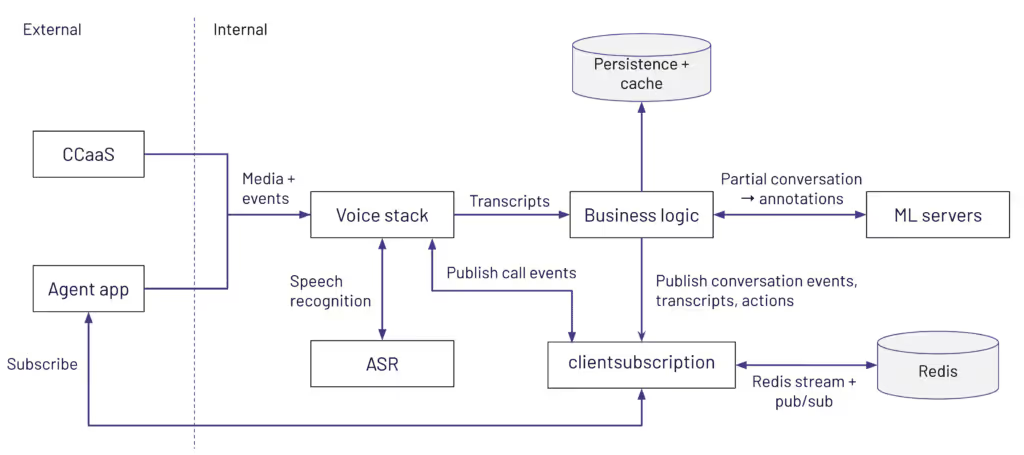

Part two, today's blog, will focus on how the voice platform processes live audio streams through its voice stack and how business logic layers power real-time guidance for agents. This part will highlight key components like speech recognition and how conversation data flows through the system.

Next week, stay tuned for part three: ML Services, Inference Graphs, and Real-Time Intelligence. The final installment will take you deeper into the machine learning (ML) stack, exploring how inference graphs orchestrate model workflows, how customer-specific policies influence ML processing, and how Cresta delivers actionable insights in real-time.

Voice platform glossary

Going forward, we want to detail a bit some domain or Cresta-specific terms and components used throughout this article:

- CCaaS — Contact Center as a Service - cloud based solution that provides contact center functionality, such as call handling, customer support and communication management. Examples include Amazon Connect, 8x8, Five9, etc. Cresta currently supports over 20 CCaaS integrations.

- Agent app — the application that is installed on the agent's desktop to provide real-time conversational intelligence. Gets information while the call is ongoing (like transcript) and other information like actions, checklists, etc. For CCaaS platforms that don’t provide audio streams, the Agent app is also responsible for capturing the audio on the client side and delivering it to the voice stack.

- gowalter — internal Cresta service. Responsible for handling incoming audio and media events, audio PII redaction and storage.

- ASR — Automatic Speech Recognition system.

- apiservice — internal Cresta service. Mainly responsible for persisting transcripts and Agent app notifications for various events, like transcript updates and call updates (call ended).

- orchestrator —* internal Cresta service orchestrating the calls to the ML Stack

Media Sources

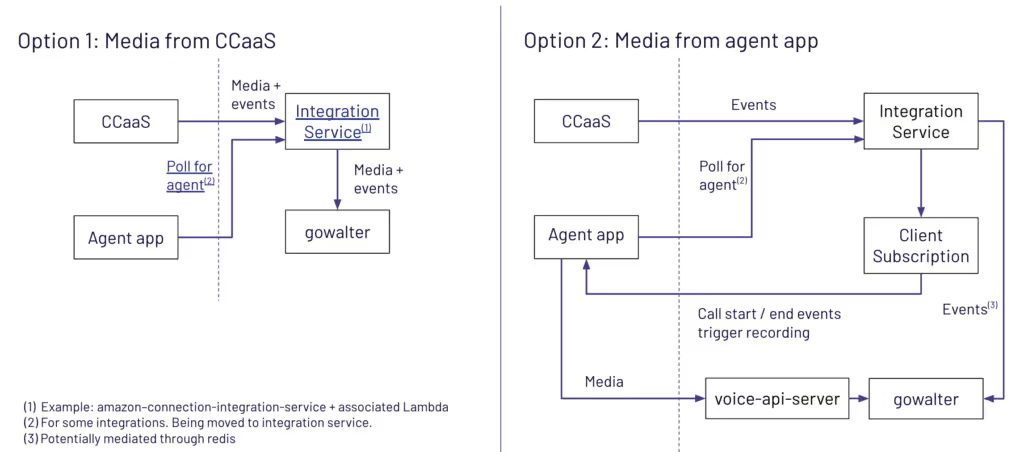

The audio and events can start from two different sources, depending on the customer’s implementation:

- CCaaS can provide the audio and events (call started, call ended).

- The Agent app provides the audio directly from the agent’s desktop when it is not available from the CCaaS.

ASR

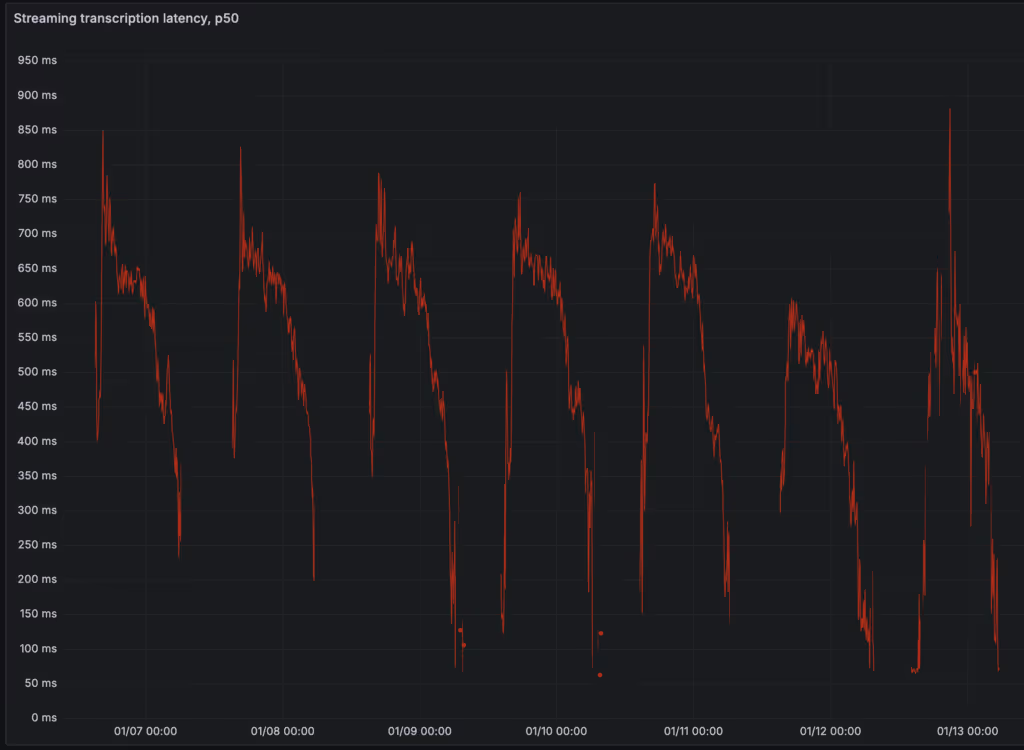

gowalter acts as the entry point in the pipeline, receiving audio from conversations. The audio chunks sent to the ASR system are usually between 20-100ms, and the ASR system returns partial transcripts roughly every 0.5-1.5 seconds. This is highly dependent on daily traffic volume for each customer, as seen in the graph below.

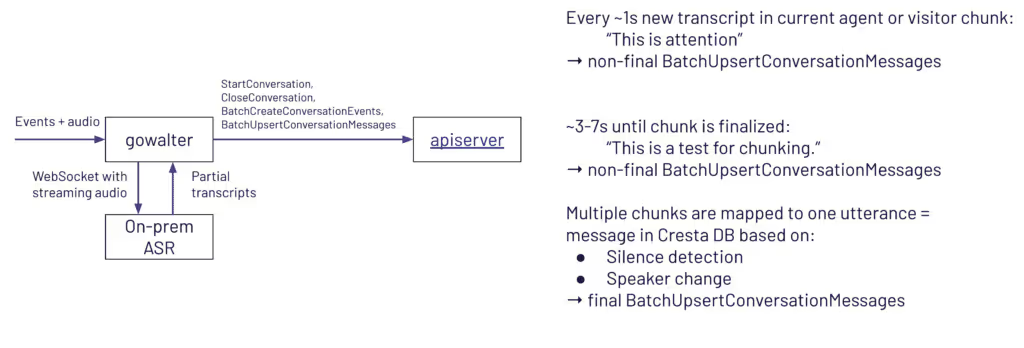

If the WebSocket to the ASR is cut-off, we have a recovery mechanism in gowalter to replay audio and ensure that no data is lost. The partial transcripts generated by the ASR system are then upserted into the system through apiserver.

Why upsert?

For ASR, real-time transcription and post-processing refinements work together to provide accurate results. Initially, the ASR generates transcripts based on the audio received so far. These early transcripts—called partial transcripts—are provisional and may change as more context from subsequent audio chunks becomes available.

For example, Deepgram might initially transcribe “I want to book a fight” but later correct this to “I want to book a flight” after analyzing the rest of the sentence. Similarly, phonetic ambiguities or incomplete sentences often lead to partial results being refined when context resolves them.

ASR typically finalizes chunks every 3-7 seconds during real-time transcription. However, from gowalter's perspective, these finalized chunks do not represent a complete conversation message. Instead, gowalter processes these chunks and organizes them into what we call “utterances”, which are stored as messages in the Cresta database.

An utterance is a higher-level grouping of one or more finalized chunks, typically determined by natural conversation boundaries, such as:

- Silence: A pause of sufficient length, indicating a break in the flow of speech.

- Speaker Changes: A shift from one participant (e.g., agent) to another (e.g., user) in the conversation.

By grouping multiple chunks into utterances, we ensure that the structure of the conversation is preserved in a way that aligns with how humans naturally segment speech. This also helps downstream systems, like the ML Services, to analyze and annotate conversations more effectively.

The apiserver is a critical part of the overall functionality, as it allows both partial and final transcripts to be persisted to our Postgres database.

Business logic

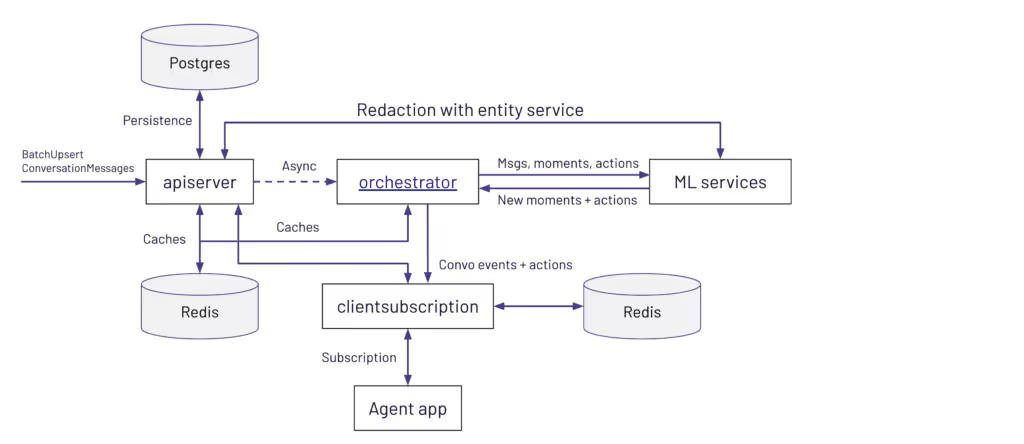

After the conversation messages are persisted, the apiserver asynchronously notifies the orchestrator service, which coordinates all the ML logic with the ML Services. The orchestrator acts as the information hub in the business logic layer, managing both:

- Fan-out communication with multiple ML Services to process data and generate new annotations.

- Notifications to the Agent app via clientsubscription for real-time updates on the conversation.

To obtain new annotations from the ML Services, the orchestrator sends the current conversation data along with previous annotations, which include both moments and actions:

- Moment annotations: markers added to the conversation for detections, generation, or policy definitions. Examples: intent detection, keyword detection, or policy checks (e.g., “should do X” or “did not do X”).

- Action annotations: visible outputs or actionable items surfaced to the user, such as hints or suggestions

Both apiserver and orchestrator send RPCs to the clientsubscription service (using redis streams) to notify the Agent app. The Agent app subscribes to events for the current signed-in agent, like “new call started”, real-time transcript updates, hints or actions and end-of call summarization.For example:

- The Agent app will register to be notified about new suggestions or hints generated for a conversation. When the ML Services determine any new actions, orchestrator will notify via clientsubscription, ensuring low latency and real-time visibility.

- The Agent app will register to be notified about transcripts for a conversation. apiserver will emit new events whenever there are new transcripts from gowalter, with roughly one update on the transcript per second (both final and partial transcripts), as latency is critical, for the agent to always see the latest transcribed audio.

Next article, we’re going to be taking a look at the ML Services. Stay tuned.