The technology behind AI agents is evolving at breakneck speed, unlocking massive potential, but in the process also fueling a wave of vendors, hype, and flashy demos. In a landscape dominated by hyper-realistic voices, it’s easy to think that realism is all that matters. But that assumption is a trap—one that can lead to real-world deployment failures and a voice experience that falls far short of expectations.

While realism and production-readiness aren’t mutually exclusive, the most cutting-edge, hyper-realistic models often lag behind when it comes to key production requirements, like clarity, stability, low latency, and emotional control at scale. Audio traits such as timbre, speed, articulation, pitch, and tone are just one part of a much broader equation.

In this installment of Cresta IQ, we evaluated today’s voice AI landscape on different vendors’ realism vs. their production readiness. What trade-offs do enterprise leaders face when choosing the right voice model for real-world deployment? How do they make an informed decision that’s best for their business and will actually scale?

Drawing from anonymized benchmarking data, deployment insights, and real-world testing including significant production traffic, we examine why sounding human isn’t good enough, and what actually matters when voice AI goes live.

The Voice AI Illusion: Why Demos Can Be Deceiving

It’s easy to make a great-sounding demo. It’s much harder to reproduce that experience in production across millions of customer conversations. In some cases, it’s not just hard, it’s technically impossible, given the current limitations of voice AI models.

We’ve seen this firsthand with voice-to-voice (V2V) models. On paper, these models eliminate the need for traditional text-to-speech (TTS), delivering ultra-low latency and highly expressive audio. But in practice, both of these models can fall short in critical ways:

- Stability is limited — responses can vary unpredictably, making it difficult to enforce compliance or consistency.

- Tuning options are minimal — customizing rhythm and emotion to align with brand guidelines remains nascent at best.

- Lack of compliance support — major V2V providers do not currently meet HIPAA or similar regulatory standards.

- Accents and recognition issues — inconsistent behavior with regional speech patterns.

- Much higher operational cost — making them prohibitively expensive at scale.

Even for more mature TTS systems, reality introduces friction that demos can’t reveal. Streaming audio in a browser at 16+ kHz or higher sounds clean and impressive, but once that audio is piped through a phone line at 8 kHz, fidelity can significantly decline. Add in customer barge-ins, background noise, and real-time load balancing, and the voice you thought was ready quickly starts to fall apart.

That’s why voice reliability is the real north star:

- Accuracy: Pronunciations, especially of names, jargon, or domain-specific terms, must be correct every time to maintain trust and prevent confusion.

- Scalability: The voice must hold up across millions of interactions without degrading in quality or responsiveness, even under heavy concurrent load.

- Latency: Responses need to be fast—typically under 250ms—to avoid awkward pauses and preserve natural conversation flow.

- Naturalness: Speech should sound fluid, human, and emotionally appropriate across diverse customer contexts and use cases.

A voice that works in every customer scenario, not just in a controlled demo, is the only voice worth trusting.

Testing Voice AI at Scale

To evaluate whether leading TTS providers are truly enterprise-ready, we used a comprehensive testing methodology grounded in real-world conditions. We measured real-time latency, assessed pronunciation accuracy, including emotion, spelling, and heteronym handling, and ran blind transcription comparisons to eliminate subjective bias.

Human-in-the-loop quality assurance (QA) ensures that each voice is reviewed for clarity, tone, and performance across a range of very common and high-stakes scenarios like escalations or interruptions. Critically, we also observed how models behave in production environments, via real-time API integration, versus in playground demos, where conditions don’t reflect real-world limitations. We tested using real APIs, live latency paths, and call simulation, ensuring that what you hear in production is in line with what end customers will experience.

Finally, we stack-ranked providers using anonymized scoring across multiple contact center scenarios, allowing us to identify which voices truly deliver at scale, and which ones only sound good in a sandbox.

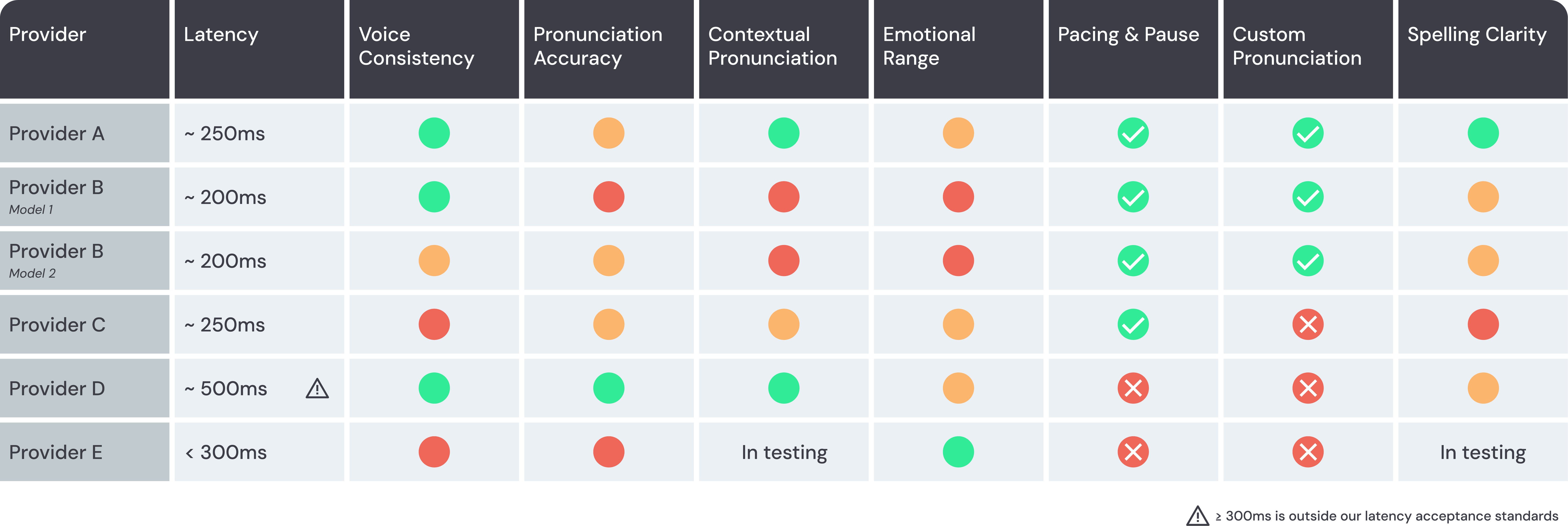

Our scoring focused on these critical dimensions:

Latency: Lower than 250ms (including both model inference time and network latency) is ideal for responsive, human-like conversation. Measured as time-to-first-byte (TTFB). Low latency ensures the AI agent responds in real time, maintaining a natural conversation flow.

Voice Consistency: The consistency of (TTS) audio quality and behavior across different utterances and sessions. Unstable voices may vary in tone, pacing, or pronunciation, breaking the illusion of a consistent agent persona. This can also include distortions or unnatural sounds.

Pronunciation Accuracy: How correctly the TTS engine pronounces words, especially uncommon names, acronyms, or technical terms. Mispronunciations reduce credibility and create confusion.

Contextual Pronunciation: The TTS engine’s ability to correctly pronounce heteronyms (words spelled the same but pronounced differently depending on context). TTS must use context to pick the right pronunciation.

Emotional Range: The voice’s ability to convey different emotions through tone, such as warmth, calm, confidence, or urgency. Emotionally appropriate responses meet the customer where they are, building rapport and trust.

Pacing & Pausing: A break in speech used to mimic natural conversation flow, improve clarity, or emphasize meaning. Properly timed pauses make the agent easier to understand and feel more thoughtful.

Custom Pronunciation: The ability to manually control how specific words are pronounced, often using SSML or phonetic markup. This ensures brand names, jargon, and customer names are spoken correctly.

Spelling Naturalness: How naturally and clearly the voice spells out items when needed, such as confirmation codes or acronyms. Spelled responses must sound smooth and be easily understood.

Production Feasibility: The voice provider’s ability to support reliable, real-world deployment at enterprise scale. This includes API stability, integration support, latency consistency, and operational uptime, all critical for ensuring the AI agent performs as expected in production environments.

It also requires adherence to key security and compliance standards—such as HIPAA, SOC 2, and GDPR—to ensure the voice experience meets regulatory and organizational requirements from day one

Here’s what the data shows: there’s no one-size-fits-all voice model. Vendors that sound impressive in demos (showcasing emotional range) often come with tradeoffs, like latency, stability, or limited tuning options.

The best models aren’t just the most ‘realistic’, they strike the right balance of clarity, control, performance, and brand fit.

What Leaders Should Be Asking

So what makes a voice truly enterprise-ready? We recommend leaders look for vendors with models that can offer

Must-haves:

- Predictable sub-250ms latency

- Consistent pronunciation across edge cases

- Scalable APIs with uptime SLAs

- Stability, or a lack of glitches, distortions, or unnatural sounds

Nice-to-haves:

- Natural emotional variation and tone control

- Strong pacing and spelling clarity

For a thorough evaluation of voice AI vendors, pursue answers to the following key questions:

Will it work in my environment - not just in a web demo?

- What does the voice sound like over the phone?

- What is the latency under high load?

- Can our QA and compliance workflows integrate with this provider?

How customizable is the voice experience to our brand?

- Can we tune speed, pitch, emotional tone, pacing, pronunciation, and rhythm?

- Can the voice be customized for different use cases? If not, can I select a suitable voice from a library of options?

Can we test and QA the voice?

- Are there tools to test and preview voice behavior before going live?

Will this scale across millions of calls without degrading?

- Does this model have production deployments?

- How does this voice model hold up in real-world, production environments?

- How is performance monitored and tuned?

- What’s the voice vendor’s uptime, latency SLA, and track record?

From Voice Model to Voice Experience: Why Design Still Matters

Selecting a strong voice model is only the beginning. The real differentiation comes from the strategic AI agent partner you choose and their approach to engineering, design, and deployment. While many vendors may license the same base voices, few know how to turn those voices into enterprise-ready, on-brand experiences, or, more critically, to ensure what’s demoed is actually deliverable in production. At Cresta, we only showcase what we can ship, and we advise leaders to be wary of vendors who don’t hold themselves to the same standard.

Much of what makes a voice work in the real world happens before a single word is spoken. Half the impact comes from how the AI agent is prompted: how it’s designed to speak, what tone it uses, and how it adapts under pressure. That prompt design defines the personality, rhythm, and clarity of the experience. It’s what shapes the content the voice reads aloud, and it matters just as much as vendor selection.

Cresta’s engineering team ensures real-time responsiveness by tuning for latency, interruption handling, end-of-utterance detection, and background noise management. We pair this with a carefully crafted design layer—expressive prompting, pacing, emphasis, and tone modulation—all tailored for contact center moments. These aren’t features of the TTS model; they’re the result of deep operational know-how, applied consistently across use cases. Our tooling supports ongoing iteration, testing, and QA to ensure every voice feels natural, intentional, and on-brand before it ever goes live.

The result? A voice that doesn’t just sound human—but performs like a trusted extension of your business.

Voice AI That Earns Trust, Not Just Applause

Demos that dazzle are around every corner, but contact centers need something more: stability, tunability, and performance through high-stakes situations.

The right voice is the one your customers can rely on. That means engineering, testing, and optimizing every detail – not just picking the most expressive model off the shelf.

Because in the end, the most important voice isn’t the one that sounds the most human in a demo. It’s the one your customers will trust, understand, and respond to.