Enterprises are racing to bring AI agents into their customer interactions, but one critical challenge continues to stand in the way: trust.

KPMG reports that only 46% of people globally are willing to trust AI systems, and that hesitancy carries over into customers’ interactions with businesses.

Industry research further demonstrates that the stakes are high: according to PwC’s 2025 AI Agent Survey, only 25% of senior leaders trust an AI agent to act autonomously in customer interactions.

Why? AI agents don’t always behave predictably. The way they reason, respond, and take action can vary significantly depending on context, phrasing, and the twists and turns of a real-world conversation.

Without rigorous testing and validation, even the most advanced AI agents can introduce costly errors, compliance risks, and reputational damage.

That’s why we’re excited to announce major enhancements to Cresta’s Automated AI Agent Testing suite, giving enterprises the confidence to deploy safely, scale faster, and deliver exceptional customer experiences at every stage of the AI agent lifecycle.

Confidence Built In, Not Assumed

The message is clear: trust in AI must be earned through disciplined, transparent testing.

Cresta’s Automated AI Agent Testing does exactly that by combining expert-aligned LLM judges, realistic customer simulations, reusable conversation evaluators, and in-product feedback loops into one integrated suite.

The result? Every release is validated, reliable, and ready for customers.

With these innovations, enterprises no longer need to piece together fragmented QA frameworks or rely on slow, manual testing. Cresta provides confidence in every conversation by checking policy conformance, workflow adherence, and factuality at scale before every AI Agent deployment or update.

What’s New in Automated AI Agent Testing

This release introduces several breakthrough capabilities:

Expert-aligned LLM Judges

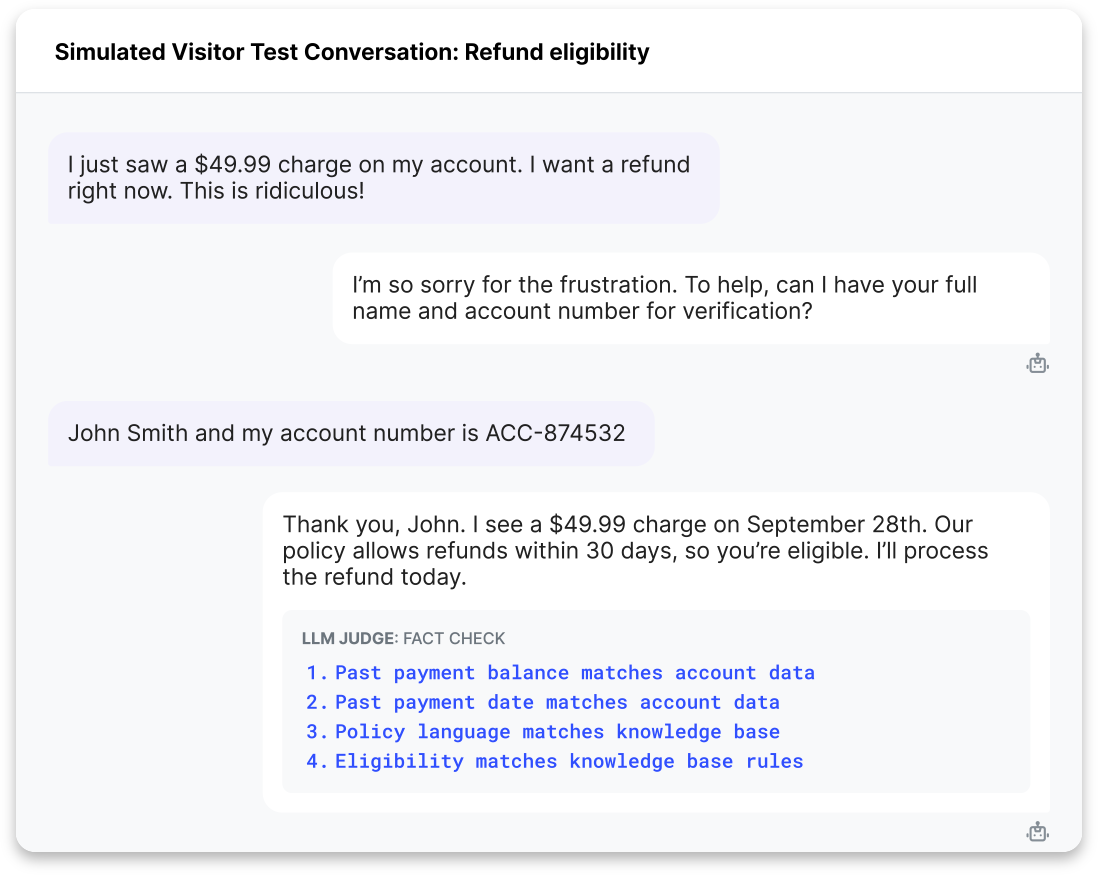

Cresta’s judges deliver reliable, human-like evaluations of what AI Agents say and do in different scenarios, considering context and nuance when assessing accuracy, precision, and faithfulness to correct processes and workflows.

For example, a “Hallucination Detection” judge ensures the AI Agent's answers come from approved sources, not made-up information. A “Golden Response” judge checks whether the AI Agent’s answer is semantically equivalent to the approved or expected response.

The LLM judges are fully aligned, calibrated and validated against expert consensus and informed by Cresta's deep contact center experience. They reflect enterprise standards across accuracy, effectiveness, workflow adherence, and more. Unlike single-preference judges that capture one person's subjective view, fully aligned judges are more robust, providing the nuance and reliability needed to evaluate complex conversations at scale. This makes them a powerful safeguard for enterprises, helping ensure AI agents not only resolve issues, but do so in line with business standards.

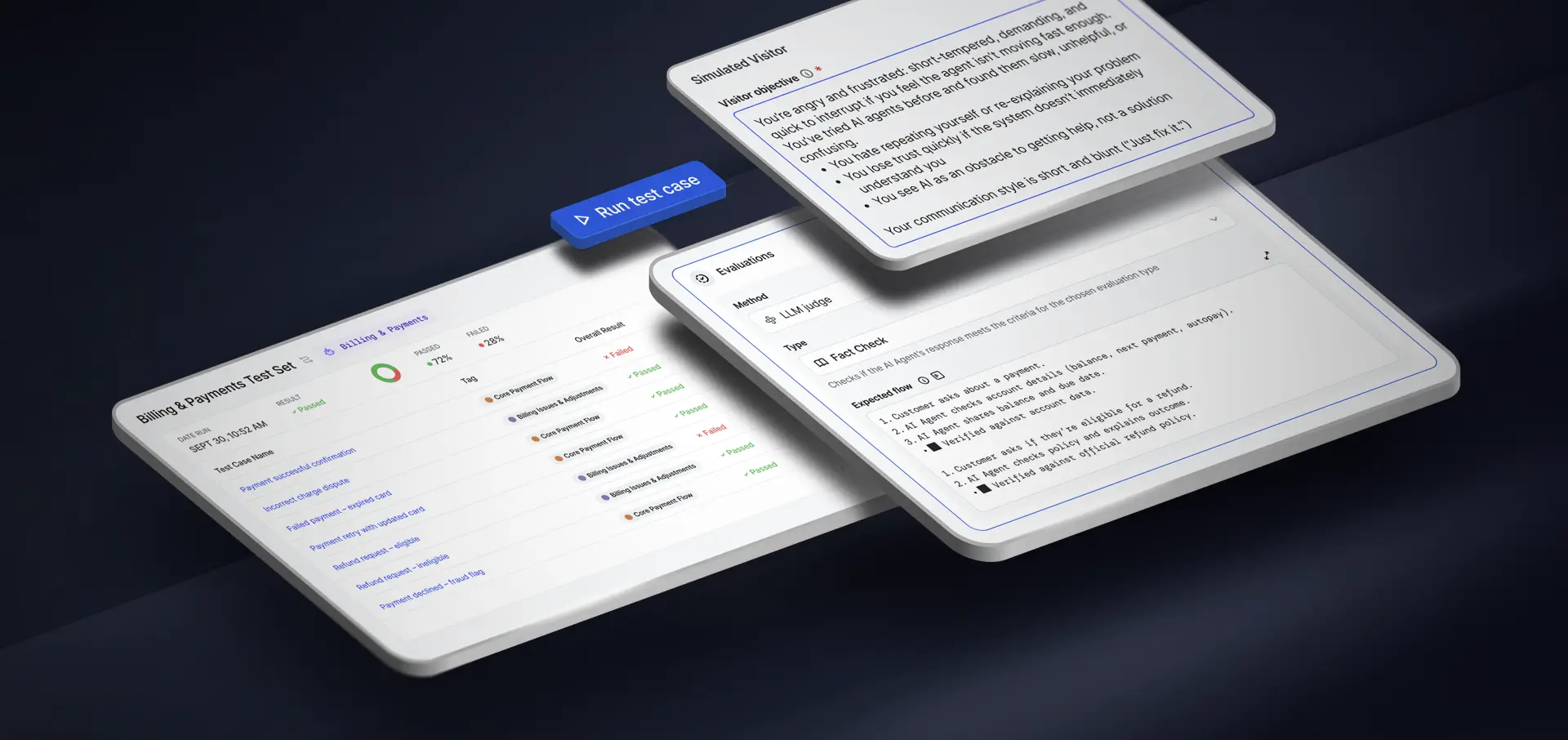

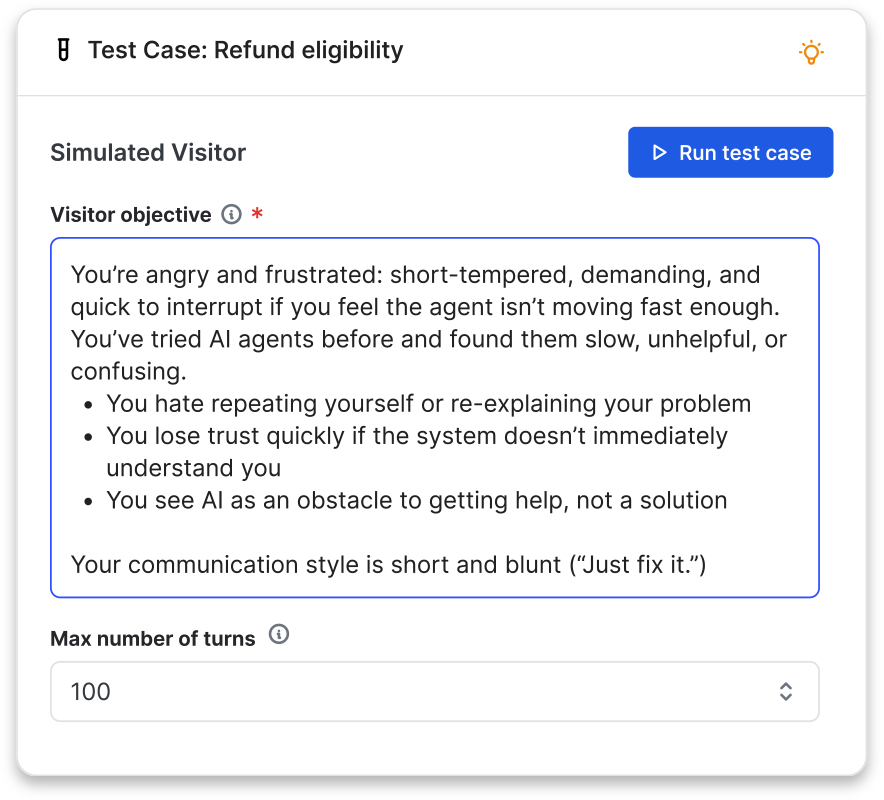

Simulations

With simulations, AI-powered “virtual customers” stress-test agents across a diverse set of realistic scenarios. Informed by millions of real conversations, these simulations reflect a deep understanding of how people actually communicate, producing virtual customers that behave like real ones.

By surfacing edge cases and unpredictable customer behaviors, enterprises are able to see how the AI Agent responds to diverse, real-world scenarios before deployment. If the AI Agent behaves unexpectedly, the tester can see where the breakdown occurred and adjust accordingly. This iterative process helps create more resilient AI Agents that perform reliably across both expected and unexpected customer interactions.
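To make the idea of locating a breakdown concrete, here is a minimal Python sketch. It is not Cresta's implementation: the `Scenario` class, the `simulate` function, and the `toy_agent` are all hypothetical names, and a scripted list of customer messages stands in for an AI-powered virtual customer. The sketch replays a scenario against an agent and reports the first turn at which the agent says something it should not.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Message = Tuple[str, str]  # (speaker, text)
AgentFn = Callable[[List[Message]], str]


@dataclass
class Scenario:
    """Hypothetical scenario: scripted customer turns plus forbidden phrases."""
    name: str
    customer_messages: List[str]
    must_not_say: List[str]


def simulate(agent: AgentFn, scenario: Scenario) -> Optional[int]:
    """Return the index of the first customer turn where the agent's reply
    contains a forbidden phrase, or None if the whole scenario passes."""
    history: List[Message] = []
    for i, message in enumerate(scenario.customer_messages):
        history.append(("customer", message))
        reply = agent(history)
        history.append(("agent", reply))
        if any(p.lower() in reply.lower() for p in scenario.must_not_say):
            return i
    return None


def toy_agent(history: List[Message]) -> str:
    """Stand-in agent that over-promises when a refund is mentioned."""
    last_customer_text = history[-1][1].lower()
    if "refund" in last_customer_text:
        return "I can guarantee a refund right now."
    return "Let me look into that for you."


scenario = Scenario(
    name="impatient refund request",
    customer_messages=["Hi, my order is late.", "I want a refund, now!"],
    must_not_say=["guarantee"],
)
breakdown_turn = simulate(toy_agent, scenario)
```

In this toy run the breakdown is reported at the second customer turn, which is the kind of pointer a tester would use to adjust the agent and rerun the scenario.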

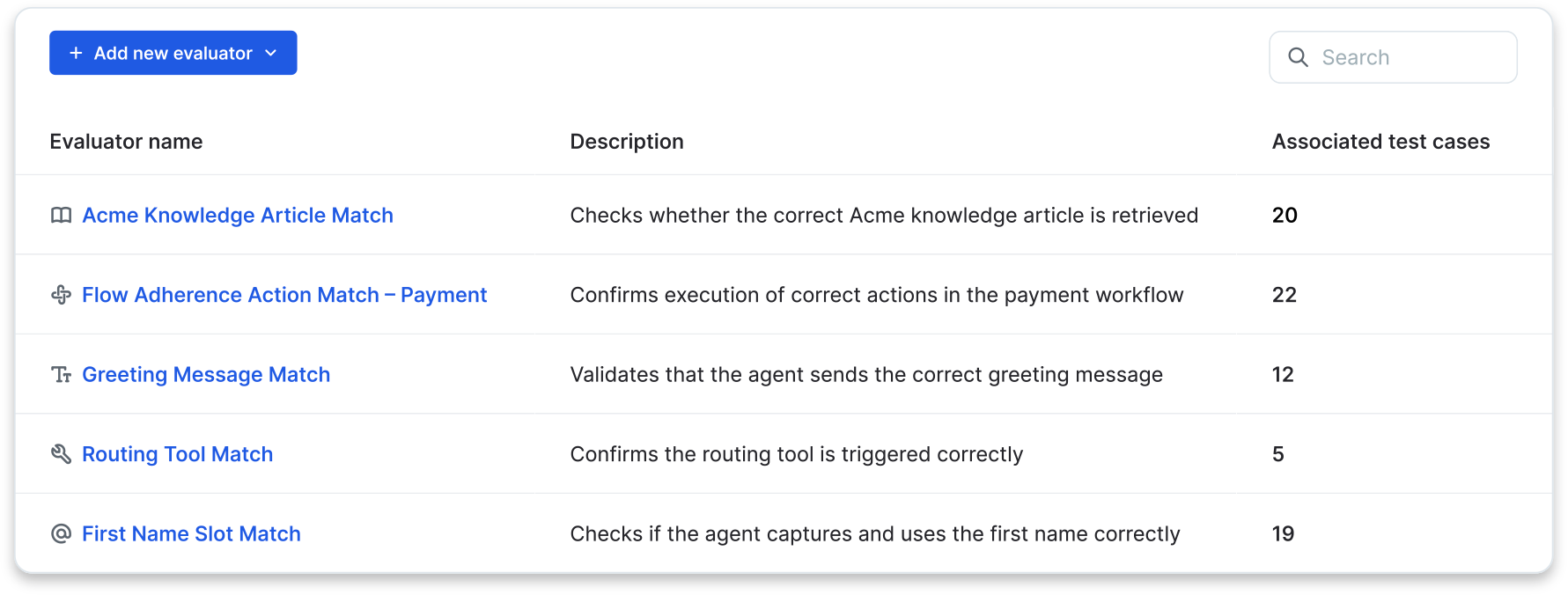

Dynamic and Reusable Evaluators

Modular evaluators let teams test AI Agents with either logic-based checks for precise rules (e.g., keyword matching, correct article retrieval, expected action) or expert-aligned LLM judges for nuanced evaluations (e.g., Hallucination Detection, Golden Response, Flow Adherence). Used together, they provide the flexibility and accuracy enterprises need to reduce errors, enforce consistency, and scale with confidence across scenarios and over time.
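As an illustration of the logic-based side of this design, the sketch below shows how small, reusable checks might be composed into a suite. It assumes a simple conversation structure; `Turn`, `EvalResult`, `keyword_evaluator`, `expected_action_evaluator`, and the action labels are hypothetical names chosen for the example, not Cresta's API. An LLM judge could be plugged into the same suite as another callable with the same signature.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Turn:
    speaker: str                  # "customer" or "agent"
    text: str
    action: Optional[str] = None  # hypothetical label for an action the agent took


@dataclass
class EvalResult:
    name: str
    passed: bool


# Every evaluator shares one signature, so suites can mix and reuse them.
Evaluator = Callable[[List[Turn]], EvalResult]


def keyword_evaluator(name: str, required: List[str]) -> Evaluator:
    """Pass when every required keyword appears somewhere in the agent's turns."""
    def check(conversation: List[Turn]) -> EvalResult:
        agent_text = " ".join(
            t.text.lower() for t in conversation if t.speaker == "agent"
        )
        return EvalResult(name, all(k.lower() in agent_text for k in required))
    return check


def expected_action_evaluator(name: str, action: str) -> Evaluator:
    """Pass when the agent took the expected action at least once."""
    def check(conversation: List[Turn]) -> EvalResult:
        actions = [t.action for t in conversation if t.speaker == "agent"]
        return EvalResult(name, action in actions)
    return check


def run_evaluators(
    conversation: List[Turn], evaluators: List[Evaluator]
) -> List[EvalResult]:
    return [evaluate(conversation) for evaluate in evaluators]


conversation = [
    Turn("customer", "I need to reset my password."),
    Turn("agent", "I've sent a reset link to your email.", action="send_reset_link"),
]
suite = [
    keyword_evaluator("mentions_reset_link", ["reset link"]),
    expected_action_evaluator("sends_reset_link", "send_reset_link"),
    expected_action_evaluator("escalates", "escalate_to_human"),
]
results = run_evaluators(conversation, suite)
```

Because each evaluator is just a named callable, the same check can be reused across scenarios and rerun unchanged against later versions of the agent.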

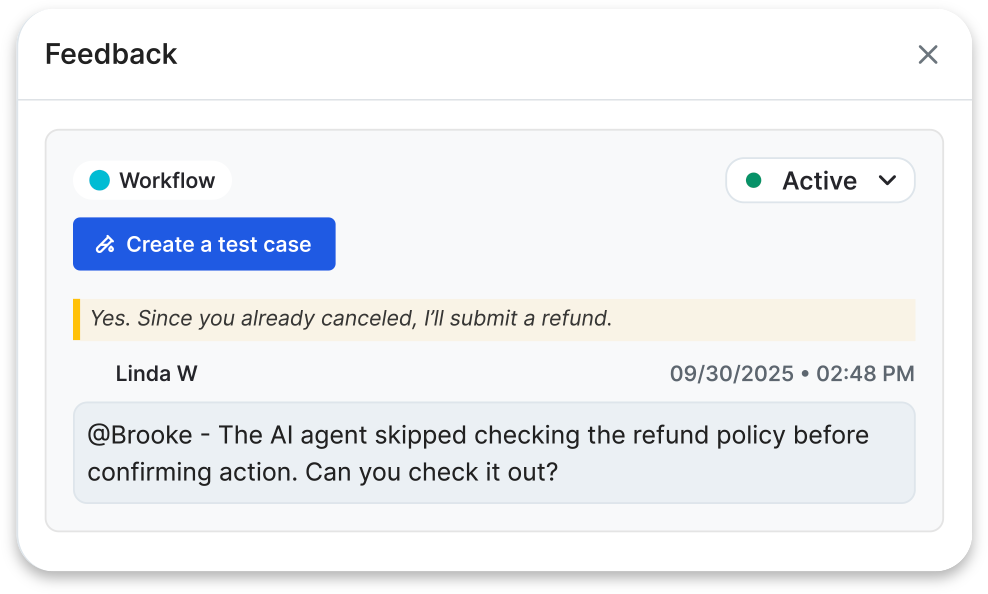

In-Product Feedback Loop & One-Click Test Creation

Teams can flag issues directly in real AI Agent conversations and instantly convert them into test cases, closing the loop between feedback and testing. Test cases can also be created from closed conversations (whether led by a human or AI agent) to capture both erroneous and “golden” interactions. These become part of regular regression checks, speeding the feedback-to-fix cycle and ensuring that the AI Agent consistently delivers the right response.
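The feedback-to-test loop described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the product's mechanism: `RegressionCase`, `case_from_flag`, and `run_regression` are hypothetical names, and a normalized exact-match comparison stands in for a semantic check such as a Golden Response judge.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Message = Tuple[str, str]  # (speaker, text)
AgentFn = Callable[[List[Message]], str]


@dataclass
class RegressionCase:
    case_id: str
    context: List[Message]  # conversation up to, but excluding, the flagged turn
    expected_reply: str
    kind: str               # "golden" or "erroneous"


def case_from_flag(
    conv_id: str,
    conversation: List[Message],
    flagged_index: int,
    corrected_reply: Optional[str] = None,
) -> RegressionCase:
    """Turn a flagged agent turn into a test case.

    Without a correction, the original reply is kept as the golden answer.
    With a correction, the reviewer's reply becomes the expected answer.
    """
    speaker, text = conversation[flagged_index]
    if speaker != "agent":
        raise ValueError("flagged turn must be an agent turn")
    kind = "golden" if corrected_reply is None else "erroneous"
    expected = text if corrected_reply is None else corrected_reply
    return RegressionCase(
        f"{conv_id}:{flagged_index}", conversation[:flagged_index], expected, kind
    )


def _normalize(text: str) -> str:
    return " ".join(text.lower().split())


def run_regression(agent: AgentFn, cases: List[RegressionCase]) -> List[str]:
    """Return the ids of cases whose reply differs from the expected one.
    Exact match after normalization is an assumption made for this sketch."""
    return [
        c.case_id
        for c in cases
        if _normalize(agent(c.context)) != _normalize(c.expected_reply)
    ]


conversation = [
    ("customer", "What is your return window?"),
    ("agent", "Returns are accepted within 90 days."),
]
case = case_from_flag(
    "conv-1", conversation, 1, corrected_reply="Returns are accepted within 30 days."
)
failing_before_fix = run_regression(lambda ctx: "Returns are accepted within 90 days.", [case])
failing_after_fix = run_regression(lambda ctx: "Returns are accepted within 30 days.", [case])
```

Once a case like this is stored, it runs with every subsequent release, so a fixed error that resurfaces is caught as a regression.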

Why Automated Testing Matters

Automated testing enables faster rollouts, reduces the need for heavy manual testing, and delivers the control, observability, and safeguards enterprises need to deploy AI Agents with confidence.

With these capabilities, enterprises see measurable gains in both scale and risk reduction:

- 30% improvement in test coverage over human testing

- 10-15x more tests executed

- 25-35% faster release cycles

At the same time, by catching issues before they reach production, companies also benefit from:

- 20-30% fewer regressions

- 15-20% fewer customer-facing errors

- 3-5x more edge cases surfaced

Most importantly, automated testing addresses one of the biggest barriers to AI adoption: trust. Cresta’s Automated AI Agent Testing suite empowers leaders to deploy with confidence, knowing every update is safe to ship and every interaction meets the highest standards of quality, compliance, and customer care.

Confidence in Every Conversation

With this launch, Cresta continues to deliver on its vision of enterprise-grade AI agents that are reliable, compliant, and efficient. Automated AI Agent Testing ensures that every release is production-ready, every update is validated, and every customer interaction earns trust.

To learn more or see the Automated AI Agent Testing suite in action, join us for a live demo webinar or schedule a demo with us today.