The enterprise contact center is global, yet human-to-human communication at scale remains constrained by language. For organizations serving an international customer base, delivering instantaneous, high-quality, and natural multilingual conversation is not merely a feature—it’s a foundational requirement for customer experience.

At Cresta, we view this challenge as more than a mere chaining of components. Building a truly real-time translator requires engineering a synchronized, high-fidelity language intelligence layer where speech detection, translation, and synthesis work in concert.

This is a deep dive into the architectural trade-offs, system design decisions, and optimizations that make real-time, production-grade translation viable.

The Challenge of Real-Time Multilingual Conversation

Achieving a natural, lag-free voice translation experience in a live conversation pushes the limits of modern AI and systems engineering. The core problem is synchronization: we must translate human speech, with all its context, emotional tone, and natural rhythm, across languages while maintaining end-to-end latency below the human-tolerable threshold.

Latency is the Ultimate Metric

For a conversation to feel natural, delay must be nearly imperceptible. This is a quantifiable engineering constraint:

- Human-Tolerable Latency: A real-time experience is generally defined by an end-to-end latency of 500–1000 ms. Once the delay exceeds this range, users perceive an awkward, turn-taking cadence; the system, not the humans, dictates the pace of conversation.

- The Pipeline Dependency: The entire system’s reliability hinges on the performance of its weakest link. Translation accuracy is highly dependent on transcription performance. If the Speech-to-Text (STT) layer incorrectly transcribes a sentence, no amount of Machine Translation (MT) or Text-to-Speech (TTS) optimization can recover the conversational quality. The entire pipeline is highly interdependent.

Balancing Stability and Speed

A key architectural trade-off emerges between a stable, high-latency pipeline and a dynamic, low-latency one.

Our engineering focus is on the Dynamic, Low Latency approach, which requires meticulous optimization across all stages to balance speed with overall output quality.

Optimizing the Multilingual Intelligence Stack

Our Real-Time Translator (RTT) pipeline is a streaming system, architected to minimize latency by ensuring that each component begins processing data before the previous component has completed its full task.

The Real-Time Translation Pipeline

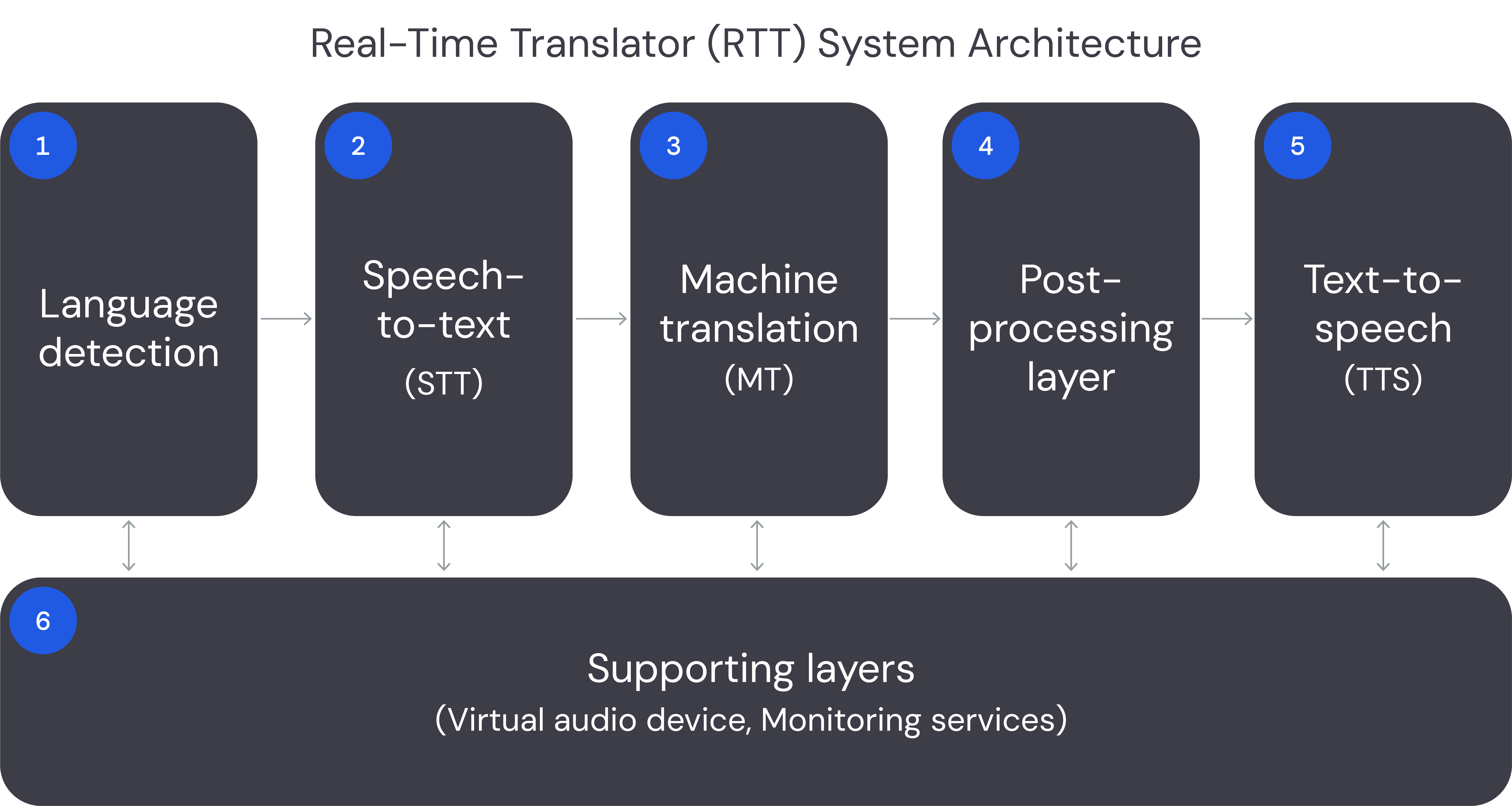

The RTT pipeline is a series of tightly connected, high-throughput components:

- Language Detection: Identifies the speaker’s language in-stream and routes the audio to the appropriate STT model configuration.

- Speech-to-Text (STT): Uses a highly optimized model (e.g., Deepgram) to convert raw speech audio into text transcripts. This component is the accuracy backbone.

- Machine Translation (MT): The core translation layer.

- Post-Processing Layer: Cleans the translated text, handling essential tasks like numeral formatting (for example, converting September twentieth, nineteen seventies to September 20, 1970 when transcribing dates), PII redaction, and conversational context/turn management.

- Text-to-Speech (TTS): Generates translated audio (e.g., Cartesia, 11labs) for playback.

- Supporting Layers: Includes a Virtual Audio Device for seamless integration with CCaaS platforms and robust Monitoring Services that continuously measure quality and delay.

Fine-Tuning and Ensemble Evaluation

We achieve optimal performance through a rigorous discipline of fine-tuning, selection, and continuous benchmarking for every component.

STT: The Foundation of Accuracy

As the blog post "Why Transcription Performance is Holding Back Your AI Strategy" highlights, poor transcription is an unrecoverable error. We adapt transcription models to specific domain acoustics, accents, and the noisy reality of contact center audio.

MT: The Quality/Latency Trade-Off

Our selection process for the translation layer is guided by a unique ensemble evaluation approach. We select the best engine (classic MT or an LLM) for each language pair by weighing multiple factors:

- Statistical Metrics: Using established metrics like COMET to provide a baseline statistical signal for translation accuracy.

- Preference Metrics: Employing LLM-Judge based evaluation to capture what matters in translating contact center conversations. These metrics include keyword translation accuracy ensuring that customer information and entities are accurately conveyed, as well as tone preservations evaluating the speech style of agents—metrics a simple BLEU score cannot capture precisely.

- Performance Metrics: Crucially, evaluating latency and cost for each model option.

This framework allows us to objectively choose between, for instance, a classic MT model (faster, lower cost) and an LLM translator (higher potential quality, but with added token-by-token generation delay), ensuring the best possible quality-performance trade-off for the enterprise.

TTS: Engineering a Native Voice Experience

While the English voice profiles are mature, human standards for conversational partners are high, especially for non-English languages where subtleties of dialect and prosody are critical. The quality of the TTS output must pass a rigorous, native-speaker-validated quality assurance process.

For each non-English language, we conduct exhaustive testing on voice profiles. This involves internal R&D efforts that systematically review the quality of potential TTS profiles, guided by a rigorous evaluation guideline that measures the synthesized voice along three critical dimensions:

This meticulous curation, validated by native speakers, ensures the synthesized speech is not merely intelligible, but engaging and professionally appropriate. Our long-term aim is to support more custom-defined voice experiences, where brand-specific or regionally preferred voice profiles can be integrated and maintained with quality rigorously proved by Cresta’s evaluation framework.

Dynamic Stability in Multilingual Contexts

To ensure stability when speakers naturally switch languages mid-conversation, our primary approach is to leverage multilingual STT models.

For language-pairs where multilingual model accuracy drops (e.g., Korean or Chinese), we experiment with a dual-mode architecture. This separates each speaker’s channel and runs dedicated, highly-optimized STT instances. This adaptation ensures dynamic switching without needing to reinitialize sessions or interrupt the audio stream, maintaining continuity while optimizing both accuracy and first-token latency.

Engineering for Latency and Continuity

Latency is the final, critical engineering battleground. To keep the end-to-end delay below the 1000ms threshold, we employ aggressive, highly optimized strategies at every stage.

Key Latency Contributors and Optimization Levers

The Role of Shadow Voice Buffering

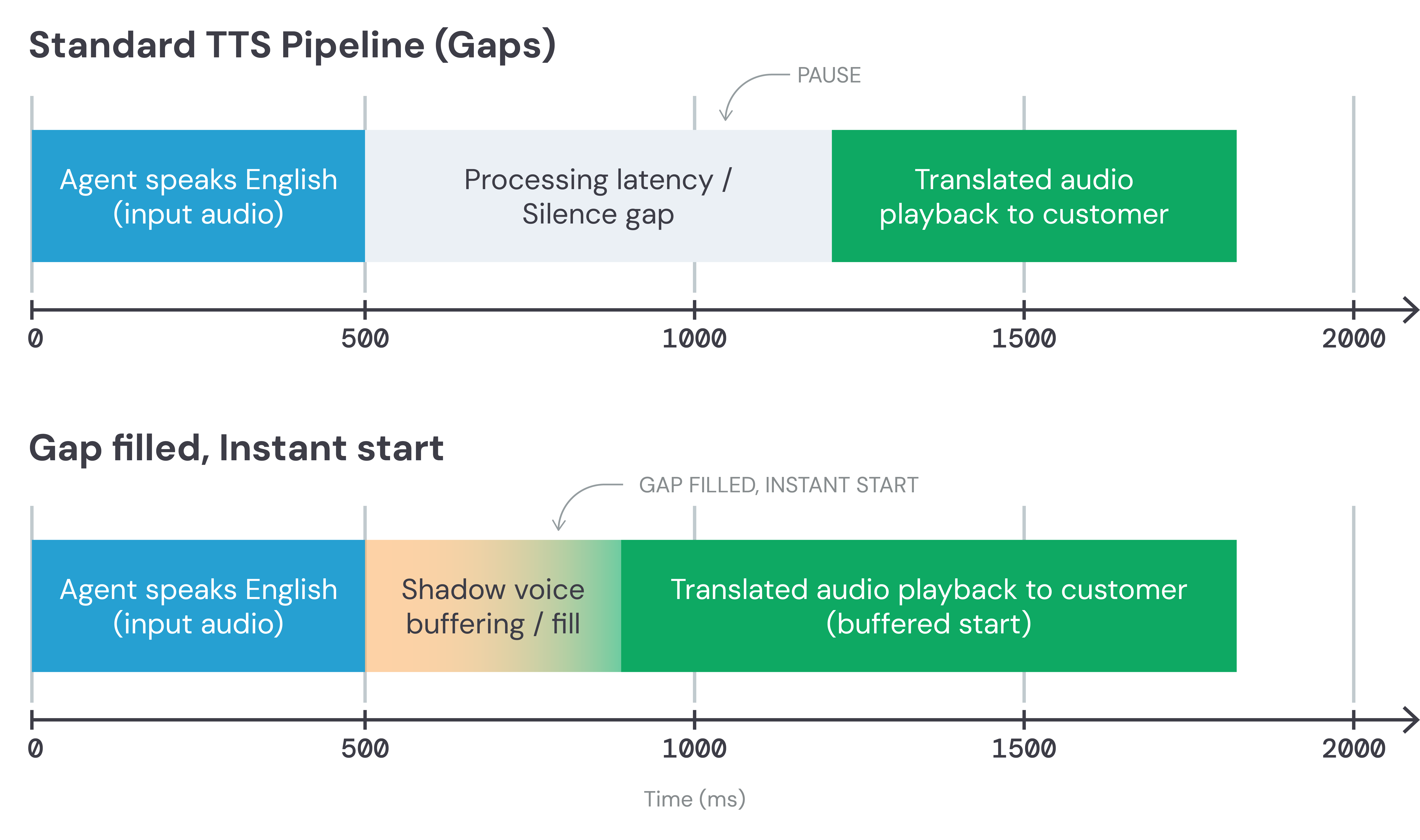

A particularly effective strategy to preserve conversational continuity is the use of Shadow Voice.

While the TTS engine is generating the translated audio bytes, there is an inherent, albeit small, delay. Shadow Voice is a mechanism that fills these brief silence gaps.

By judiciously buffering and using a subtle, pre-generated audio bridge during the TTS synthesis period, we prevent the conversation from sounding punctuated and awkward. This technique ensures playback begins almost instantly while maintaining a continuous, natural flow, masking the underlying generation latency from the end-user.

Looking Ahead: Real-Time Multilingual Collaboration

Our work on RTT is not a static endpoint; it is the foundation of a deeper Language Intelligence layer that will enable a new generation of global conversational AI.

We are actively exploring several key areas to push the boundaries of real-time intelligence:

- LLM-based "Healing": Using large language models to correct and "heal" imperfect transcriptions before they are sent to the translation layer, minimizing downstream errors.

- Expressive TTS: Integrating emotional and expressive TTS to convey non-verbal cues and tone accurately, moving beyond a purely textual representation of meaning.

- Speaker Voice Preservation: Researching methods to maintain the original speaker's voice identity across languages, enhancing the personal connection.

- Latency Reduction: Leveraging lighter and better multilingual models and caching common replies to achieve further reductions in overall pipeline latency.

The open research question remains how to seamlessly maintain conversational flow across languages: managing implied context, shared assumptions, and cultural nuances that are not explicitly stated.

The shift from a demonstration to a production-grade, enterprise-scale real-time translator demands a comprehensive, engineering-first approach. By continuously balancing accuracy, latency, and continuity through optimized streaming architectures and rigorous evaluation frameworks, we are building the infrastructure for a truly global, lag-free human conversation.